With slow, steady movements, the robotic arm writes on a sheet of white paper lying on the table in front of it. It writes one letter at a time, but then something unexpected happens: the arm holding the pencil stops writing, quickly reaches backwards, well away from the paper, and picks up another pencil. It then quietly continues with its work as though nothing unusual had happened.

This scene is part of an experiment currently being carried out by Professor Dr. Myra Spiliopoulou, Professor of Business Informatics, and Professor Dr. Frank Ortmeier, Professor of Software Engineering, in the robotics lab of the Faculty of Computer Science at Otto von Guericke University (OVGU). Their aim is to find out how people react to this situation: does it make them feel surprised, amused or uncertain? In future experiments, video cameras will record the gestures and facial expressions of test subjects, while sensors measure their heart rate and the electrodermal activity of their skin. This sensor technology will generate high-dimensional data streams, whose patterns Myra Spiliopoulou and her team will analyze using artificial intelligence (AI) in order to identify stress and tension. The researchers hope to better understand how humans and robots affect one another, and how human reactions can be captured without complex sensors worn on the body, for example through audio and video.

As intuitive and flexible as a human being

The experiments are the first steps in a major project in which other working groups from OVGU, Chemnitz University of Technology and TU Ilmenau are also participating. The three universities have been working closely together since 2008 in the Chemnitz-Ilmenau-Magdeburg (CHIM) research and innovation network. With “Productive Teaming” they now aim to enter the Excellence Competition and jointly initiate a fundamental paradigm shift in industrial production, because, as Frank Ortmeier explains, today's industrial production is no longer in keeping with the times.

Prof. Ortmeier (Photo: Jana Dünnhaupt / Uni Magdeburg)

“Production lines like those we see today in the automotive industry, for example, are highly efficient,” he emphasizes. But the disadvantages of this form of automation are serious. “The fact that we are a throw-away society and do not have a circular economy is, among other things, due to our production processes,” says Frank Ortmeier. “It is often cheaper to produce new machines than to repair them.” This consumes unnecessarily large amounts of resources and energy. Moreover, on highly automated, static production lines, human beings have, to exaggerate somewhat, become slaves to the machines.

“Why can we not design machines in such a way that they live with us like human beings, intuitively and flexibly?” asks Frank Ortmeier, who is working on precisely this vision of “productive teaming”. At present, robots cannot recognize whether someone is unsure or tired, has backache, or needs to react to a change in the environment. The researchers hope to give machines these capabilities.

The researchers are laying some of the necessary foundations through the experiments with the writing robot, a so-called cobot, which is equipped with numerous sensors in its joints. These allow it to react to touch and stop quickly, for example to avoid a collision. Nadia Schillreff, a doctoral student in the robotics laboratory, explains what else sets this kind of collaborative robot apart from others: “It behaves in a similar way to a human arm and has the same flexibility, with a shoulder, elbow and wrist.” Normally, this kind of cobot, which can also grasp, lift and move objects, works very quietly and sedately, unless, as in the experiment described above, somebody programs it to make unexpected movements.

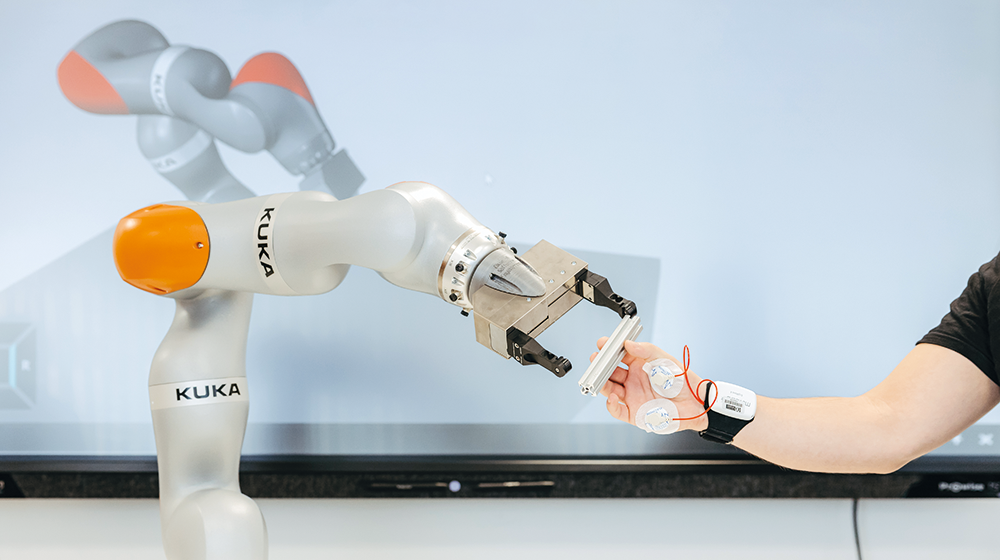

A 'cognitively augmented' robot works flexibly and adaptively together with a human on an assembly task. (Photo: Jana Dünnhaupt / Uni Magdeburg)

“If humans and machines are to cooperate, then the machine must know something about the intended goal, understand the person's actions, and even participate in them,” says Myra Spiliopoulou, describing one of the biggest challenges in making “productive teaming” work. If the pencil breaks or something falls, the robot needs to recognize what has happened, react and adapt. If a person is surprised, for example because the machine suddenly moves differently, the machine should recognize this too and signal that everything is all right. For machines to learn this, new experiments, measuring techniques, AI methods and experimental data are needed, and these are being developed and collected in Magdeburg, Chemnitz and Ilmenau.

Eyes and ears of the robot

Professor Dr. Gunther Notni of TU Ilmenau focuses on the sensor technology and image processing this requires. Together with his own team and other groups at TU Ilmenau, the physicist and metrologist is using his laboratory, a virtually simulated production environment, to investigate how robots can learn from sensor data to better understand human beings, so that both can work together “like a real team”. “To this end, the robot needs to see the situation, assess it correctly, react quickly and also know what will happen next or what is needed,” explains Gunther Notni. Sensors serve as the robot's eyes and ears.

The Ilmenau research team's repertoire includes real-time 3D sensors, thermal imaging cameras, equipment for measuring electrical activity in the brain, sensors for acoustic signals, and even fiber sensors woven into clothing that record movements and movement patterns in the finest detail. All this data must be evaluated using AI and then provides a comprehensive picture of the workflows, the person's condition and how the robot can adapt to it. Ultimately, AI models trained on large quantities of experimental data should be able to recognize all of this information solely from the data supplied by the sensors in the industrial production lines of the future.

A wearable sensor for monitoring vital signs can be used with AI to recognize emotions. (Photo: Jana Dünnhaupt / Uni Magdeburg)

Gunther Notni sees the future of “productive teaming” less in highly automated, standardized production lines than wherever individual solutions are needed. This applies to assembly, disassembly and repair, as well as to the skilled trades and to construction sites, which additionally face a shortage of skilled workers. So will robots carry cement sacks, assemble furniture or lay tiles in the future? “Things might move in this direction,” says the researcher.

Achieving optimum production efficiency and avoiding mistakes

One of the CHIM network's research partners, the Fraunhofer Institute for Machine Tools and Forming Technology (IWU) in Chemnitz, is currently working on another specific use case: machine tools that heat up during operation due to friction and waste heat. This reduces the quality of the parts produced. “The machine tools become less accurate,” explains Institute Director Professor Dr. Steffen Ihlenfeldt. “And we are trying to reduce this imprecision through targeted cooling.”

However, cooling requires energy and comes at a cost. When and where cooling is needed is decided by the experienced personnel operating the machinery. Using sensor technology, the workers' experience and self-learning AI models, Steffen Ihlenfeldt and his team are developing interactive tools that can evaluate data in real time and ensure that heating and cooling are optimally coordinated. “Wherever metal parts need to be produced with a high degree of precision in small batches, for example in aviation or toolmaking, this system will in future be able to support the machine operator, prevent waste and produce with a minimum of energy and resources,” explains Steffen Ihlenfeldt.

“In five to ten years,” estimates Frank Ortmeier, “it could be possible to implement ‘productive teaming’.” “But,” he stresses, “it is also a question of political will.” In his view, society must first undergo a paradigm shift. One of the most important milestones along the way is acceptance, which also needs to be advanced through research. The scientists hope that in a few years the CHIM network, together with around 30 other universities, will be part of an international center for “Productive Teaming” that collects and uses data sets, exchanges AI models, develops use cases and communicates with society on the subject.